Since writing the Drowning in JavaScript post, I’ve been meaning to automate that analysis and see whether I could generate some other insights. This weekend I finally got around to writing a quick PhantomJS script to load the top 100 Alexa sites and capture each linked resource along with its type. The resulting data comes in two sets: the time it took each page to load, and the content type of each linked file. After loading these two datasets into R and doing a few simple transformations, we get some interesting results.

Average load time. To get a general sense of the data, this plots the average time it took to load each URL. The interesting piece here is that several sites based outside the US take a while to load (163.com, qq.com, gmw.cn, ...). I suspect a big reason is latency, since I'm based in the US. Another observation is that many news sites tended to load slowly (huffingtonpost.com, cnn.com, cnet.com). The Google sites loaded extremely quickly (all in under 1 second), as did Wikipedia.
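For anyone who wants to reproduce the averaging step outside R, here is a minimal Python sketch of the idea. It is not the script behind the plot: the `samples` rows, the `example-*.com` hostnames, and the `average_load_times` helper are all made up for illustration, and it assumes each URL was timed more than once.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sample rows: (url, load time in milliseconds).
# These values are invented for illustration, not measurements from this post.
samples = [
    ("example-a.com", 820),
    ("example-a.com", 780),
    ("example-b.com", 3100),
    ("example-b.com", 2900),
    ("example-c.com", 1500),
]


def average_load_times(rows):
    """Return (url, mean load time) pairs sorted from slowest to fastest."""
    by_url = defaultdict(list)
    for url, load_ms in rows:
        by_url[url].append(load_ms)
    averages = {url: mean(times) for url, times in by_url.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


for url, avg_ms in average_load_times(samples):
    print(f"{url}: {avg_ms:.0f} ms")
```

Sorting slowest-first makes the outliers easy to spot, which is the same thing the plot is meant to surface.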

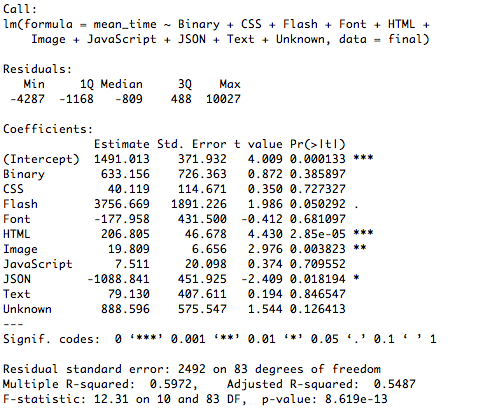

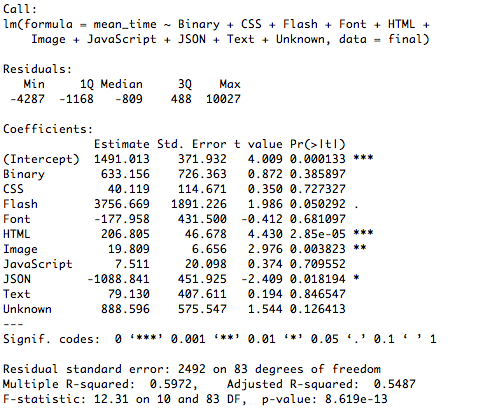

Multiple linear regression. And just for fun, we can run a regression to see whether a particular file type leads to significantly worse load times than others. I was hoping to show that having a lot of JavaScript hurts performance, but that doesn't seem to be the case. I suspect it's due to the inherent differences in how long some sites take to load (in particular, sites outside the US) compared with others.
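To make the regression concrete, here is a rough Python sketch of an ordinary least-squares fit of load time against per-page file counts. It is a stand-in for the R analysis, not a copy of it: the `pages` rows are synthetic and noise-free (generated from 200 + 30·js + 10·css + 5·images), the helper names are hypothetical, and it only recovers coefficients. Testing significance would also need standard errors, which a statistics package reports for you.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augment the matrix so row operations apply to both sides at once.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: predictors are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitute from the last row upward.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_ols(features, target):
    """Fit target ~ intercept + features by solving the normal equations."""
    rows = [[1.0] + list(f) for f in features]  # prepend intercept column
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, target)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic, noise-free rows: ((js files, css files, images), load time in ms).
# Invented for illustration; not the data collected for this post.
pages = [
    ((2, 1, 10), 320),
    ((5, 2, 20), 470),
    ((8, 1, 5), 475),
    ((3, 4, 40), 530),
    ((10, 3, 15), 605),
    ((1, 2, 30), 400),
]

coefficients = fit_ols([f for f, _ in pages], [t for _, t in pages])
for name, value in zip(["intercept", "js", "css", "images"], coefficients):
    print(f"{name}: {value:.2f}")
```

With real measurements the fit would not be this clean, and a per-site effect like network latency could easily dominate the file-type coefficients.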

As usual, the code’s up on GitHub.